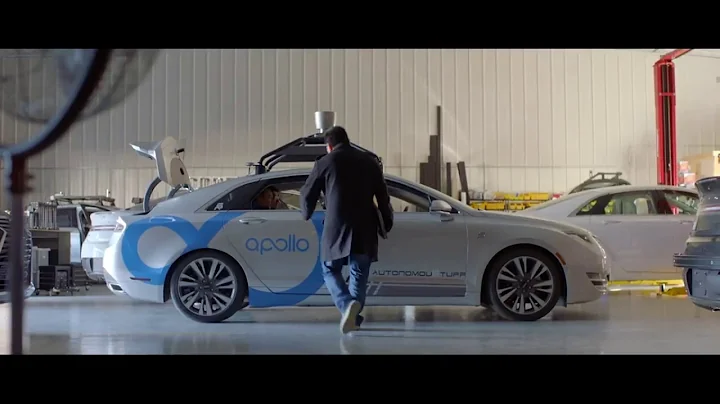

A research team from the University of Illinois at Urbana-Champaign recently developed a new fault assessment technique for autonomous driving. They first ran a software fault assessment on Baidu Apollo 3.0 and on DriveAV, NVIDIA's proprietary autonomous driving system.

The team reported finding 561 critical safety faults within 4 hours. The specific faults attributed to Baidu Apollo 3.0 and to NVIDIA were not spelled out.

Baidu responded promptly with a statement saying that it “attaches great importance to the module abnormalities found in the test and will continue to optimize. The operation of the Apollo autonomous driving system depends on software and hardware modules working together to complete the safe operation of autonomous driving. For the new FI test, it likewise relies on a multi-module software and hardware coordination mechanism to keep risks from actually occurring.”

Baidu's response indicates that, in its view, a pure software fault alone cannot cause a system-level safety accident in an autonomous vehicle.

Beyond Baidu's response, whether the scenario is feasible on a real vehicle is itself open to discussion in several respects.

According to the paper, the researchers tested Apollo's code in a virtual container (Docker) on a server, an environment in which the injected faults could not be resolved.

The key premise is that fault injection targets a system, and Baidu's real system is a combination of software and hardware. On a vehicle, Apollo code runs on automotive-grade hardware that meets functional safety requirements, so even if a fault is injected, it does not lead to a problem.

Moreover, in actual driving, once Apollo's redundant system detects an abnormal vehicle speed, it will not execute a hard accelerator command, so there is no risk of a rear-end collision.

In addition, the paper manually modifies the control output of the autonomous driving system so that the accelerator pedal is floored, a situation that does not occur on real safety hardware.
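To make the idea of a redundant check concrete, here is a minimal sketch of a plausibility monitor that sits between the controller and the actuator. It is not Apollo's implementation: the class name `ThrottleMonitor`, its thresholds, and the rule of never raising the throttle on a suspicious command are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ThrottleMonitor:
    """Hypothetical redundant check; NOT Apollo's actual implementation."""

    max_speed_error: float = 2.0    # m/s above target treated as abnormal (assumed)
    max_throttle_step: float = 0.2  # largest allowed throttle rise per cycle (assumed)

    def filter(self, throttle_cmd, prev_throttle, speed, target_speed):
        """Return the throttle value that is actually forwarded to the actuator."""
        speed_abnormal = speed - target_speed > self.max_speed_error
        jump_abnormal = throttle_cmd - prev_throttle > self.max_throttle_step
        if speed_abnormal or jump_abnormal:
            # Suspicious command: never let the throttle increase.
            return min(prev_throttle, throttle_cmd)
        return throttle_cmd


monitor = ThrottleMonitor()
# A light throttle command (0.1) is corrupted into a floored pedal (1.0).
forwarded = monitor.filter(throttle_cmd=1.0, prev_throttle=0.1,
                           speed=15.0, target_speed=15.0)
```

In this sketch the corrupted command is rejected and the previous throttle value is kept, which mirrors the behavior described above only at the level of the idea.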

In our actual driving experience, even if the car surges forward slightly, in most cases it will not hit the car in front.

The paper does describe one extreme case: the car is following the vehicle ahead at a safe distance, the speed is altered, and the car accelerates. If a car from an adjacent lane happens to merge in at that moment, the safe distance suddenly shrinks and the car crashes.

However, the lane-changing car would bear full responsibility for such an accident.

Baidu personnel gave the same explanation: “On real hardware, this part of the code runs on an ASIL-D safety MCU. This kind of safety MCU has two processors that perform the same calculation and cross-check each other. The test changes the memory of one processor and makes it compute a wrong result, which then differs from the value of the other processor. The mismatch is detected immediately, and the result is never output.”
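The cross-check described in the quote can be illustrated with a small software simulation of two processors running in lockstep. This is only a sketch of the principle: real safety MCUs perform the comparison in hardware, and the control law, the `memory` dictionaries, and the function names below are hypothetical.

```python
def compute_throttle(memory):
    """Toy control law: throttle proportional to speed error, clamped to [0, 1]."""
    error = memory["target_speed"] - memory["speed"]
    return max(0.0, min(1.0, memory["gain"] * error))


def lockstep_output(memory_a, memory_b):
    """Run the same calculation on both cores; output only if the results agree."""
    result_a = compute_throttle(memory_a)
    result_b = compute_throttle(memory_b)
    if result_a != result_b:
        return None  # mismatch detected: the value is suppressed
    return result_a


healthy = {"target_speed": 20.0, "speed": 18.0, "gain": 0.1}
core_a = dict(healthy)  # each core has its own copy of the data
core_b = dict(healthy)
nominal = lockstep_output(core_a, core_b)  # both cores agree

core_a["gain"] = 10.0  # inject a fault into one core's memory only
faulty = lockstep_output(core_a, core_b)   # cores disagree, nothing is output
```

A fault that corrupts both copies identically would pass this comparison, which is why the sketch injects the fault into one core only, as in the quoted explanation.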

Autonomous driving safety experts also commented on the incident. As they describe it, the "fault injection" research method implants faults at selected locations; if a scenario then leads to an accident, the implanted fault is counted as a "fault" in the research.

In other words, if the brakes are disabled during a test and an accident results, the brake failure is counted as a "fault".
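The counting logic the experts describe can be sketched with a toy simulator: inject one fault at a time and count it if the scenario ends in a collision. This is a plain exhaustive campaign, not the Bayesian method from the paper, and the scenario, the numbers, and the fault names are all made up for illustration.

```python
def simulate(fault=None, steps=100, dt=0.1):
    """Toy scenario: ego car approaches a stopped obstacle. Returns True on collision."""
    ego_pos, ego_speed = 0.0, 20.0  # metres, metres per second
    obstacle_pos = 100.0
    for _ in range(steps):
        gap = obstacle_pos - ego_pos
        if gap <= 0.0:
            return True
        # Nominal controller: brake hard once the gap drops below 60 m.
        accel = -6.0 if gap < 60.0 else 0.0
        if fault is not None:
            accel = fault(accel)  # the injected fault corrupts the command
        ego_speed = max(0.0, ego_speed + accel * dt)
        ego_pos += ego_speed * dt
    return obstacle_pos - ego_pos <= 0.0


# Hypothetical faults, each a function that rewrites the acceleration command.
FAULTS = {
    "brake_disabled": lambda accel: max(accel, 0.0),
    "throttle_stuck_full": lambda accel: 3.0,
    "brake_slightly_weak": lambda accel: accel * 0.9,
}


def run_campaign(faults):
    """Return the names of the faults whose injection leads to a collision."""
    return [name for name, fault in faults.items() if simulate(fault)]


safety_critical = run_campaign(FAULTS)
```

Here the disabled brake and the stuck throttle are counted, while the slightly weakened brake is not, because the car still stops in time. The simulator contains no hardware safety layer, so it says nothing about what would happen on a real vehicle.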

In fact, in July this year, researchers from the University of Illinois at Urbana-Champaign published a paper titled "ML-based Fault Injection for Autonomous Vehicles: A Case for Bayesian Fault Injection". According to the paper, the researchers conducted two FI tests on Baidu Apollo and NVIDIA.

Under traditional fault injection (FI), the Apollo code and parameters showed no problems, and performance was stable.

In this incident, researchers at the University of Illinois at Urbana-Champaign set out to validate autonomous driving systems through fault assessment. However, because the evaluation involved excessive manual modification and its results came from simulation tests, the public misunderstood the findings.

The rights and interests of both evaluators and companies should be respected. To sort out the responsibilities involved, we need to start from the facts and take a rational view of the problems that arise in autonomous vehicles.

The following is Baidu Apollo's complete response:

We have noted the recent interest in and use of the Apollo open platform by researchers at the University of Illinois at Urbana-Champaign. We are getting in touch with them for more information.

According to the paper, the researchers performed two FI tests on Apollo. Under traditional fault injection (FI), the Apollo code and parameters did not show any problems, and performance was stable. We thank the university's researchers for their recognition of the Apollo platform. The new type of FI deliberately changes code and parameters to cause serious or warning-level errors, which can help autonomous driving systems better discover safety risks.

The operation of the Apollo autonomous driving system relies on the cooperation of software and hardware modules to complete the safe operation of autonomous driving. For the module failures in this new FI test, we have a multi-module software and hardware coordination mechanism to avoid actual risks. In the new FI test, the light accelerator control signal output by the code was artificially changed into a hard accelerator command. In practice, once the Apollo redundant system finds that the vehicle speed is abnormal, it will not execute the hard accelerator action, and there is no comparable risk of a rear-end collision.

Even though multi-module coordination and software and hardware redundancy can avoid safety risks, we still attach great importance to the module anomalies discovered by FI and will continue to optimize.

As far as the open source platform itself is concerned, we are happy to see that it has brought convenience and new possibilities to everyone's research and development. Through open source code, we discover and solve problems together. In a period of rapid technological development, an open source system such as Apollo, with the help of developers worldwide, will usually be safer than a closed system. This is the purpose and significance of our open source effort.

Apollo continues to iterate every day, becoming safer, more reliable, and more complete. Apollo also hopes to bring everyone's strengths together to jointly advance humanity's dreams and progress.