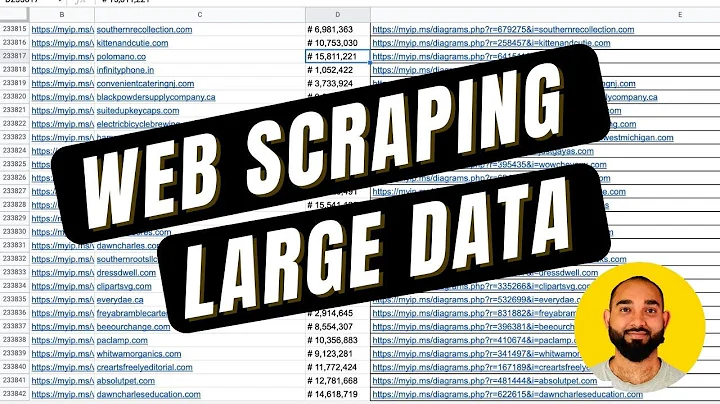

The tool I want to share today is a Chrome plug-in called Web Scraper, which extracts data from web pages. In a sense, you can also use it as a crawler tool.

This came about because I was recently sorting out the tags on 36Kr articles and wanted to see what standards other websites, especially venture-capital-related ones, use that we could refer to. I found a site called Xiniu Data, which provides a set of "Industry System" tags with great reference value. I wanted to capture that data from the page and merge it into our own tag library, as shown in red in the following figure:

If this were data laid out in a regular format, I could simply select it with the mouse and copy and paste it, but data embedded in the page like this calls for another approach. That's when I remembered I had installed Web Scraper before, so I gave it a try. It was quite easy to use and sped up collection right away, so I'm recommending it to you too~

I first saw the Web Scraper Chrome plug-in in a Sanjieke public course a year ago, where it was billed as "black tech" that lets you build crawlers without knowing how to program. I can no longer find that course on Sanjieke's official website, but if you search Baidu for "Sanjieke crawler" you can still find it. It's called "Data Crawler Course That Everyone Can Learn", and it seems to cost 100 yuan. I think you can learn this tool just by reading articles online, for example, this one of mine~

In simple terms, Web Scraper is a Chrome-based web page element parser: through visual point-and-click operations, it extracts the data/elements from regions of the page that you define. It also offers scheduled automatic extraction, so it can serve as a simple crawler tool.

While I'm at it, let me explain the difference between crawling with a web page extractor and writing a crawler in code. Automatic extraction with a web page extractor is a bit like a robot simulating manual clicks: you first define which elements to grab and which pages to grab them from, then let the machine do the work for you. If you write a crawler in Python, you typically send a web request to download the whole page first, then parse the HTML elements in code, extract the content you want, and repeat this in a loop. By comparison, code is more flexible, but writing the parsing takes more effort. For simple page content extraction, I also recommend Web Scraper.
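To make the "write code" route concrete, here is a minimal sketch in Python using only the standard library. It downloads each page with `urllib.request`, parses the HTML with `html.parser`, collects the text of the elements you care about, and loops over the pages. The class name `tag-item` and any URLs you pass in are hypothetical placeholders, and the parser assumes reasonably well-formed HTML (every non-void tag is closed).

```python
from html.parser import HTMLParser
from urllib.request import urlopen

# Void elements never get a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "param", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collects the text inside every element whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0       # > 0 while inside a matching element
        self.current = []    # text fragments of the element being read
        self.results = []    # one string per matching element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # a child tag inside the current match
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self.depth = 1   # entering a new match

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:  # the matching element just closed
            self.results.append("".join(self.current).strip())
            self.current = []

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def extract(html, target_class):
    """Parse one HTML page and return the text of the elements with target_class."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.results


def crawl(urls, target_class):
    """Request each page, parse it, and collect the results: the download/parse/loop cycle."""
    results = {}
    for url in urls:
        with urlopen(url, timeout=10) as response:
            charset = response.headers.get_content_charset() or "utf-8"
            html = response.read().decode(charset, errors="replace")
        results[url] = extract(html, target_class)
    return results
```

For example, `extract('<ul><li class="tag-item">AI</li><li class="tag-item">Fintech</li></ul>', "tag-item")` returns `["AI", "Fintech"]`. One caveat: `urlopen` only fetches the raw HTML, so content that a page builds with JavaScript won't appear in it. That is one case where a browser-based tool like Web Scraper is simply easier.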

I won't cover Web Scraper's installation or walk through all of its features in today's article. First, I only used the parts I needed; second, there are plenty of Web Scraper tutorials out there that you can find yourself.

Here I'll just go through one practical example to briefly show how I use it.

The first step is to create a Sitemap

Open Chrome and press F12 to bring up the developer tools. Web Scraper is on the last tab; click it, then open the "Create new sitemap" menu and click the "Create Sitemap" option.

First enter the URL of the website you want to crawl and a name of your choosing for the crawl task. For example, I named mine xiniulevel, and the URL is http://www.xiniudata.com/industry/level
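If you'd rather not retype a sitemap by hand, Web Scraper can also import one as JSON through its "Import Sitemap" option. The sketch below builds such a JSON document in Python for the sitemap above. The field names (`_id`, `startUrl`, `selectors`) reflect how Web Scraper's exported sitemaps look, as far as I know. Treat that layout as an assumption and compare it with a sitemap you export yourself before relying on it.

```python
import json


def build_sitemap(sitemap_id, start_url, selectors=None):
    """Return a Web Scraper-style sitemap as JSON text (field layout assumed, see above)."""
    sitemap = {
        "_id": sitemap_id,              # the sitemap name entered in the form
        "startUrl": [start_url],        # start URL(s), stored as a list
        "selectors": selectors or [],   # filled in once selectors are defined
    }
    return json.dumps(sitemap, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    # Paste the printed JSON into the "Import Sitemap" form.
    print(build_sitemap("xiniulevel", "http://www.xiniudata.com/industry/level"))
```

`ensure_ascii=False` keeps any Chinese text in the JSON readable instead of turning it into `\u` escapes.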

The second step is to create a scraping node (selector)

I want to scrape the first-level and second-level tags, so I open the sitemap I just created and click "Add new selector" to open the selector configuration page. When I click the "Select" button, a floating panel appears.

Now, as you move the mouse over the page, whatever element is under the cursor is highlighted in green. Click a block you want to select and it turns red. To select all blocks at the same level, also click the next adjacent block. The tool then selects every block at that level by default, as shown in the following figure:
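Why does clicking a second, adjacent block select the whole row? Here is one rough way to picture it. This is an illustration only, not Web Scraper's actual code. Each clicked block has a CSS path that pins it to a position, such as `li:nth-of-type(1)`. Comparing the two paths and dropping the position index where they differ yields one selector that matches every sibling. The example paths below are made up.

```python
import re

# Matches a positional pseudo-class such as ":nth-of-type(3)" or ":nth-child(2)".
POSITION = re.compile(r":nth-(?:of-type|child)\(\d+\)")


def generalize(path_a, path_b):
    """Merge two CSS paths (parts joined by ' > ') into one matching both and their siblings."""
    parts_a = path_a.split(" > ")
    parts_b = path_b.split(" > ")
    if len(parts_a) != len(parts_b):
        raise ValueError("paths have different depths; not sibling blocks")
    merged = []
    for a, b in zip(parts_a, parts_b):
        if a == b:
            merged.append(a)
        elif POSITION.sub("", a) == POSITION.sub("", b):
            merged.append(POSITION.sub("", a))  # same element, different position
        else:
            raise ValueError(f"paths differ at {a!r} vs {b!r}")
    return " > ".join(merged)
```

For example, `generalize("div.tags > ul > li:nth-of-type(1) > a", "div.tags > ul > li:nth-of-type(2) > a")` gives `"div.tags > ul > li > a"`, which matches every link in that list.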

You'll notice that the text box in the floating panel is automatically filled with the CSS selector path of the selected blocks. Click "Done selecting!" to finish. The floating panel disappears, and the selector is filled into the Selector field below. Be sure to also check "Multiple" to declare that you want to select multiple blocks. Finally, click the "Save selector" button.
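The "Multiple" checkbox matters because one selector can match many elements. Here is a minimal sketch of the difference, using Python's standard `html.parser`. With `multiple=False` you keep only the first match. With `multiple=True` you keep all of them. Matching by tag name plus class is a simplification I'm assuming here, since real CSS selectors are far richer, and the sample HTML is made up.

```python
from html.parser import HTMLParser


class TagClassCollector(HTMLParser):
    """Collects the text of every <tag class="..."> element (same-tag nesting not handled)."""

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag, self.css_class = tag, css_class
        self.inside = False
        self.matches = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes:
            self.inside = True
            self.matches.append("")  # start a new match

    def handle_endtag(self, tag):
        if tag == self.tag:
            self.inside = False

    def handle_data(self, data):
        if self.inside:
            self.matches[-1] += data


def select_text(html, tag, css_class, multiple):
    """Return all matches when multiple is True, otherwise at most the first one."""
    parser = TagClassCollector(tag, css_class)
    parser.feed(html)
    parser.close()
    matches = [m.strip() for m in parser.matches]
    return matches if multiple else matches[:1]
```

On `'<ul><li class="tag">AI</li><li class="tag">Fintech</li></ul>'`, `select_text(..., "li", "tag", multiple=True)` returns both tags. Forgetting "Multiple" corresponds to `multiple=False`, which keeps only `"AI"`.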

The third step is to get the element values

After creating the selector, go back to the previous page and you'll see a new row in the selector table. Click "Data preview" in its Action column to view all the element values you want to collect.

The figure above shows the case where I've added two selectors, one for the first-level tags and one for the second-level tags. The pop-up that appears when I click "Data preview" already contains exactly what I want, so I just copy it straight into Excel. There's no need for more complicated automated crawling.
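Copying from the Data preview pop-up into Excel works fine for one page. If you collect the tag pairs as text over several runs, a small script can save them as a CSV file that Excel opens directly. This is a sketch. The column names `level_1`/`level_2` and the sample rows are hypothetical. The `utf-8-sig` encoding writes a byte-order mark so that Excel recognizes the file as UTF-8 and displays Chinese tag names correctly.

```python
import csv
import io


def rows_to_csv(rows, fieldnames=("level_1", "level_2")):
    """Serialize (first-level tag, second-level tag) pairs as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(fieldnames)
    writer.writerows(rows)
    return buffer.getvalue()


def save_for_excel(rows, path):
    """Write the CSV with a UTF-8 BOM so Excel detects the encoding."""
    # newline="" keeps the csv module's own "\r\n" line endings unchanged.
    with open(path, "w", encoding="utf-8-sig", newline="") as f:
        f.write(rows_to_csv(rows))
```

Usage: `save_for_excel([("Finance", "Payments"), ("Finance", "Insurance")], "tags.csv")`, with made-up sample tags.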

That's a brief walkthrough of how I use Web Scraper. My approach isn't the most efficient: every time I want the second-level tags, I have to switch the first-level tag manually and run the extraction again. There's probably a better way, but this is enough for me. This article mainly aims to introduce the tool rather than serve as a tutorial, so explore more features according to your own needs~

So, did this help you? I look forward to your comments~