Editor | Cabbage Leaf

CEO Nigel Toon said that although Graphcore is best known for its AI computer hardware, the company has begun competing with Nvidia for the software ecosystem.

Toon told the media that software is at the heart of the enormous challenges posed by ever-larger artificial intelligence problems, while hardware, though not trivial, is in a sense secondary.

Toon said: "You can build all kinds of fancy hardware, but if you can't actually build software that translates the very simple descriptions a person gives into hardware, then you can't really produce solutions."

The point he wanted to emphasize is the software factor: specifically, the ability of Graphcore's Poplar software to convert programs written in AI frameworks such as PyTorch or TensorFlow into efficient machine code.

Toon believes that the act of translation is, in fact, the key to artificial intelligence. No matter what hardware you build, the challenge is how to translate what a PyTorch or TensorFlow programmer is doing into what a transistor can do.

A common notion is that AI hardware is all about accelerating matrix multiplication, the building block of neural network weight updates. Fundamentally, though, it is not.

"Is it just matrix multiplication, or just convolution, or do we need other operations? Actually, it's more about the complexity of the data," he said.

Toon said that a large neural network such as GPT-3 is "a true associative memory," so the connections between pieces of data are essential, and moving things in and out of memory becomes the computational bottleneck.

Toon is very familiar with this connection problem. He spent 14 years at programmable chip maker Altera, which was later acquired by Intel. Altera's programmable logic chips, known as FPGAs, carry out each task by connecting their compute blocks, called cells, through fuses blown between them.

He explained that "all the software" for FPGAs is about how to take a graph, known as a netlist, or RTL, and translate it into interconnects inside the FPGA.

Such software tasks become very complex.

"You build an interconnect hierarchy inside the chip to try to do this, but from a software perspective, mapping the graph to the interconnect is an NP-hard problem," he said, referring to the complexity class of problems at least as hard as the hardest problems in NP ("non-deterministic polynomial time"), for which no efficient general algorithm is known.
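A toy illustration of why this mapping gets hard, offered as a minimal sketch and not as Altera's, Intel's or Graphcore's actual tooling: the hypothetical netlist below has five blocks and five wires, and the code places the blocks into a single row of slots by trying every permutation and keeping the placement with the shortest total wire length. Exhaustive search grows factorially with the number of blocks (120 placements here, about 3.6 million for ten), which is why real place-and-route tools rely on heuristics.

```python
from itertools import permutations

# Hypothetical toy netlist: nodes are logic blocks, edges are wires between them.
NODES = ["a", "b", "c", "d", "e"]
EDGES = [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d"), ("d", "e")]


def wire_length(placement: dict[str, int]) -> int:
    """Total wire length when each node sits at an integer slot in a 1-D row."""
    return sum(abs(placement[u] - placement[v]) for u, v in EDGES)


def best_placement(nodes: list[str]) -> tuple[int, dict[str, int], int]:
    """Exhaustively try every assignment of nodes to slots 0..n-1.

    Returns (best cost, best placement, number of placements examined).
    """
    best_cost, best, tried = None, None, 0
    for order in permutations(range(len(nodes))):
        placement = dict(zip(nodes, order))
        cost = wire_length(placement)
        tried += 1
        if best_cost is None or cost < best_cost:
            best_cost, best = cost, placement
    return best_cost, best, tried


if __name__ == "__main__":
    cost, placement, tried = best_placement(NODES)
    print(f"examined {tried} placements, best wire length {cost}: {placement}")
```

Adding a sixth block multiplies the work by six, a seventh by seven, and so on; that blow-up, rather than any single placement, is the software problem Toon describes.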

Because it's about translating the complexity of connections into transistors, "it's really a graph problem, which is why we named our company Graphcore," Toon said. Broadly speaking, a graph here is the set of interdependencies between the different computational tasks in a given program.
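A minimal sketch of what such a graph looks like, assuming a made-up dataflow graph for a tiny layer (the op names are hypothetical and this is not Poplar's API): each operation lists the operations it depends on, and a topological sort groups them into levels whose members are independent of one another and could therefore run in parallel.

```python
from collections import defaultdict

# Hypothetical dataflow graph for a tiny layer: op -> ops it depends on.
GRAPH = {
    "load_x": [],
    "load_w1": [],
    "load_w2": [],
    "matmul1": ["load_x", "load_w1"],
    "matmul2": ["load_x", "load_w2"],
    "add": ["matmul1", "matmul2"],
    "relu": ["add"],
}


def parallel_levels(graph: dict[str, list[str]]) -> list[list[str]]:
    """Kahn-style topological sort that returns ops grouped by level.

    Ops within one level have all their inputs produced by earlier levels,
    so they do not depend on each other and could run concurrently.
    """
    indegree = {op: len(deps) for op, deps in graph.items()}
    consumers = defaultdict(list)
    for op, deps in graph.items():
        for dep in deps:
            consumers[dep].append(op)

    level = sorted(op for op, d in indegree.items() if d == 0)
    levels = []
    while level:
        levels.append(level)
        nxt = []
        for op in level:
            for user in consumers[op]:
                indegree[user] -= 1
                if indegree[user] == 0:
                    nxt.append(user)
        level = sorted(nxt)
    if sum(len(lvl) for lvl in levels) != len(graph):
        raise ValueError("graph has a cycle")
    return levels


if __name__ == "__main__":
    for i, ops in enumerate(parallel_levels(GRAPH)):
        print(i, ops)
```

Here the two matrix multiplications land in the same level because neither needs the other's result; finding and exploiting that kind of independence at scale is what "highly parallel graph processing" refers to.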

"You have to start with a computer science approach, which is going to be a graph, and you need to build a processor to process the graph, do highly parallel graph processing."

"We build the software on top of that, and then we build the processor," he said.

In other words, the hardware exists to serve the software.

"Computers follow data structures," Toon argued. "It's a software problem."

This was Toon's chance to riff on Nvidia's CUDA software, which holds immense power in the world of AI.

“It’s interesting: a lot of people say CUDA is an ecosystem that in some ways prevents others from competing,” Toon observed.

"But what people misunderstand is that no one is programming in CUDA, no one wants to program in CUDA. People want to program in TensorFlow and now PyTorch, and then JAX; they want a high-level construct," he said, referring to the open-source development libraries built by Meta (PyTorch) and Google (TensorFlow and JAX).

"All of these frameworks are graph frameworks," he observed. "What you are describing is a fairly abstract graph in which each element, at its core, is a large operator."

Toon noted that Nvidia "has built an amazing set of libraries to translate high-level abstractions that programmers are familiar with - that's what Nvidia does, not necessarily CUDA."

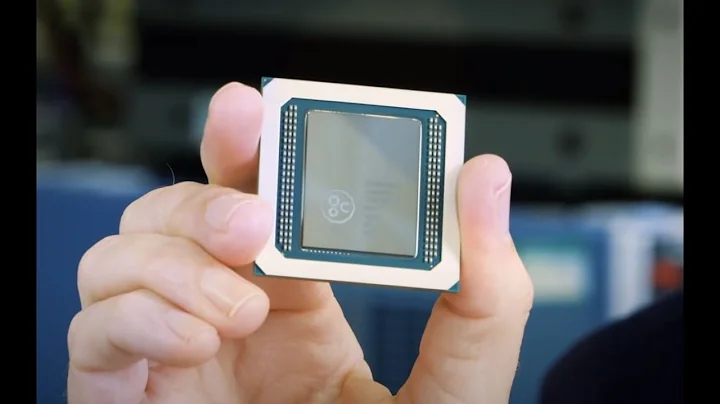

Enter Poplar, Graphcore's competing software, which takes programs from PyTorch and other frameworks and translates them for what Toon considers Graphcore's more efficient hardware. Poplar takes computational graphs apart and maps them onto whatever gates are in Graphcore hardware today, and whatever will replace them tomorrow.

There is skepticism, however, about whether Graphcore or the many other young up-and-comers, such as Cerebras Systems and SambaNova, can compete with Nvidia on the libraries Toon mentioned.

In an April editorial, Linley Gwennap, editor of the well-known Microprocessor Report, wrote that "software, not hardware" remains the problem. Gwennap believes time is running out for Graphcore and others to close the gap as Nvidia keeps advancing with hardware improvements such as Hopper.

Are skeptics like Gwennap failing to appreciate the progress of the Poplar software?

"This is a journey," Toon said. "If you had tried Poplar two years ago, you would have said it was not good enough; if you try Poplar now, you will say it is actually not bad."

"Two years from now, people will say, wow, this allows me to do things that I can't do on a GPU."

Toon asserts that the software is already its own ever-expanding ecosystem. "Look at the ecosystem we've created around Poplar, like PyTorch Lightning and PyTorch Geometric," he said, referring to two PyTorch extensions that have been ported to Poplar and Graphcore's IPU chips.

"It's not just TensorFlow, it's a complete suite," he said. "TensorFlow is suitable for AI researchers, but it is not an environment where data scientists, individuals or large enterprises can come and play."

Practitioners and data scientists, by contrast, need accessible tools. "We use Hugging Face, Weights and Biases," among other machine learning tools, he noted. "There are a lot of other libraries coming out, and some companies are building services on top of the IPU," he added, and "MLOps [tooling] has been ported for use with Poplar."

He said that, compared with Cerebras and other competitors, Graphcore is "way ahead of the curve in building a software ecosystem, in terms of creating things that are easy to use and accessible to people."

Toon insists that, in fact, it comes down to a software duopoly. "If you look at anyone else, even the big companies, no one else has an ecosystem like this other than us and Nvidia."

At the same time, he argued that Nvidia's hardware advances are constrained, because Nvidia's design freedom is limited by its own success. "What is Nvidia doing? They added Tensor Cores, and now they've added Transformer cores. They can't change the base core of the processor, because if they did, all the libraries would have to be thrown away."

He claimed that although Graphcore still trails Nvidia in most benchmarks of the MLPerf industry test suite, the combination of Poplar and the IPU design offers measurable advantages in specific, carefully chosen situations.

"For example, on some models, such as graph neural networks, we see performance that is five to 10 times that of Nvidia-based machines," he said, "because the underlying data structures we built inside the IPU are much better matched to these sparse types of graph computation."
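To illustrate the kind of sparse graph computation Toon is referring to, here is a minimal message-passing step of the sort graph neural networks use, written in plain Python on assumed toy data (it is not Graphcore's implementation and makes no performance claim): each node averages its neighbors' feature vectors by walking an adjacency list, so the work and memory traffic follow the graph's edges rather than a dense matrix layout.

```python
# Hypothetical small graph stored as an adjacency list: only real edges are kept.
ADJ = {0: [1, 2], 1: [0], 2: [0, 3], 3: [2]}

# One 2-dimensional feature vector per node (toy values).
FEATURES = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [2.0, 2.0], 3: [4.0, 0.0]}


def mean_aggregate(adj: dict[int, list[int]],
                   feats: dict[int, list[float]]) -> dict[int, list[float]]:
    """One GNN-style message-passing step: each node takes the mean of its
    neighbours' features. Work scales with the number of edges, not n * n."""
    out = {}
    for node, neighbours in adj.items():
        dim = len(feats[node])
        if not neighbours:
            # Isolated node: nothing to aggregate.
            out[node] = [0.0] * dim
            continue
        summed = [0.0] * dim
        for nb in neighbours:  # irregular, data-dependent memory access
            for k in range(dim):
                summed[k] += feats[nb][k]
        out[node] = [s / len(neighbours) for s in summed]
    return out


if __name__ == "__main__":
    print(mean_aggregate(ADJ, FEATURES))
```

On a GPU the same step is often expressed as a multiplication by a mostly-zero adjacency matrix; the edge-by-edge form above makes the irregular access pattern visible.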

Poplar software also achieves two to three times speed improvements in running Transformer models by finding clever ways to parallelize graph elements, he said.

Software is the battlefield, and what makes it possible to compete with Nvidia at all is that artificial intelligence itself is still evolving. Toon maintains that there is plenty of room for AI programs to grow larger, which will push the limits of computing power.

And the fundamental problem of cracking the code of human cognition is still far from solved.

First of all, programs do keep getting bigger.

Today's largest artificial intelligence models, such as Nvidia and Microsoft's Megatron-Turing NLG, a natural language generation model descended from the Transformer architecture introduced in 2017, have hundreds of billions of parameters (530 billion in Megatron-Turing NLG's case). Parameters, or weights, are the elements of a neural network that are adjusted during training, loosely analogous to the synapses between real human neurons.

Some, including Cerebras, have pointed to a future of trillions or even quadrillions of parameters, and Toon agrees.

"As the number of parameters increases, so does the amount of data," Toon said. "The amount of computation grows with the product of the two, which is why these large GPU farms keep growing."
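One way to see why compute grows with both quantities is a widely used rule of thumb from the scaling-law literature (an outside approximation, not a figure from Toon): training a dense Transformer costs roughly 6 floating-point operations per parameter per training token, so compute C is about 6 × N × D for N parameters and D tokens. Plugging in GPT-3's published 175 billion parameters and roughly 300 billion training tokens lands close to the approximately 3.14 × 10^23 FLOPs reported for that model.

```python
def training_flops(params: float, tokens: float,
                   flops_per_param_token: float = 6.0) -> float:
    """Rough training compute via the C ~ 6 * N * D rule of thumb (an approximation)."""
    return flops_per_param_token * params * tokens


if __name__ == "__main__":
    # GPT-3: about 175e9 parameters trained on about 300e9 tokens.
    gpt3 = training_flops(175e9, 300e9)
    print(f"GPT-3 estimate: {gpt3:.2e} FLOPs")  # GPT-3 estimate: 3.15e+23 FLOPs
    # Doubling both parameters and data quadruples the estimated compute.
    print(training_flops(350e9, 600e9) / gpt3)  # 4.0
```

Because the estimate is a product, growing models and datasets together compounds the compute bill, which is the dynamic behind the ever-larger GPU farms.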

One hundred trillion parameters is a magic target, because that is thought to be roughly the number of synapses in the human brain, so it serves as a benchmark of sorts.

There's clearly no debate that the problems keep getting bigger, given that Graphcore, Nvidia and everyone else are building increasingly powerful machines for them.

Toon was more interested, however, in the second point: the computer science question of whether anything useful can be accomplished with all of this, and whether it can approach human intelligence.

“The challenge around this is, you know, if you have a model with 100 trillion parameters, is it going to become as smart as a human?” Toon said.

It's not just a matter of throwing transistors at the problem, it's a matter of designing the system.

"You know, do we really know how to train it?" he asked, meaning how to train a neural network once it has 100 trillion weights. "Do we know how to feed it the right information? Do we know how to build this model so that it actually matches human intelligence, or will it be very inefficient despite having more parameters?"

In other words, "Do we really know how to build a machine that matches the intelligence of the brain?"

One answer he provided was specialization. A model with a quadrillion parameters may be very good at something narrowly defined. “In a system like [DeepMind’s gaming algorithm] Atari, you have enough constraints to understand that world,” Toon said.

Similarly, "Maybe we can build enough understanding of, for example, how cells work, how DNA is converted into RNA and then into proteins, so that you can have a reinforcement learning system that uses this understanding to solve problems, like, okay, how do I fold the protein so that it binds to this cell, and I can communicate with, say, a cancer cell," Toon mused. "Add a drug to the protein and it could cure cancer."

"It would be a bit like what DeepMind did with Atari games, turning it into a superhuman system, in this case one that could kill cancer, but it would be specialized."

Another approach, he suggested, is "a more general understanding of the world," similar to how human infants learn, through "exposure to large amounts of data about the world." Toon said the 100-trillion-synapse problem will be one of building "hierarchies."

"Humans build a hierarchy of understanding of the world," he said, and then they "interpolate," filling in the gaps. "You use what you know to extrapolate and imagine," he said.

"Humans are very bad at extrapolation; we're better at interpolation, and you know, there's something missing - I know, I know, it's somewhere in the middle."

Toon's thinking about hierarchies and interpolation echoes that of some theorists in the field, including Yann LeCun, Meta's chief AI scientist, who has talked about building hierarchies of understanding into neural networks. Toon said he agrees with some of LeCun's ideas.

Toon said that from this perspective, the challenge of artificial intelligence becomes "How do you build a large enough understanding of the world that allows you to do more interpolation rather than extrapolation?"

He believes this challenge will involve highly "sparse" data, with parameters updated on small, successive chunks of data instead of through massive retraining on all of the data.

"Even within the specific things you are updating your picture of the world on, you may have to touch different points in your understanding of the world," Toon explained. "It may not be neatly concentrated in one place; the data is very messy and very sparse."

From a computational perspective, "you end up with a lot of different parallel operations," he said. "It's all very sparse, because they are dealing with different pieces of data."
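A hedged sketch of what sparse, incremental updating can look like in code, using a generic technique (row-wise sparse SGD on an embedding-style table) rather than anything Toon or Graphcore specified; the table size, learning rate and batch are hypothetical. Only the rows a small batch actually references are read and written, and because each touched row is updated independently, those updates could be spread across parallel workers.

```python
import random

NUM_ROWS, DIM = 10_000, 4   # hypothetical table size
LEARNING_RATE = 0.1         # hypothetical step size

# A large parameter table; a given batch touches only a few scattered rows.
table = [[0.0] * DIM for _ in range(NUM_ROWS)]


def sparse_sgd_step(table: list[list[float]],
                    batch: list[tuple[int, list[float]]],
                    lr: float = LEARNING_RATE) -> set[int]:
    """Apply plain SGD only to rows referenced by the batch.

    batch: (row_index, gradient_vector) pairs; gradients for repeated rows accumulate.
    Returns the set of row indices that were updated.
    """
    grads: dict[int, list[float]] = {}
    for row, grad in batch:
        acc = grads.setdefault(row, [0.0] * len(grad))
        for k, g in enumerate(grad):
            acc[k] += g
    # Each touched row is updated independently of the others.
    for row, grad in grads.items():
        table[row] = [w - lr * g for w, g in zip(table[row], grad)]
    return set(grads)


if __name__ == "__main__":
    rng = random.Random(0)
    batch = [(rng.randrange(NUM_ROWS), [1.0] * DIM) for _ in range(8)]
    touched = sparse_sgd_step(table, batch)
    print(f"updated {len(touched)} of {NUM_ROWS} rows")
```

The contrast with a dense update is the point: a full retraining pass would touch all 10,000 rows, while this step reads and writes at most eight of them, scattered across memory.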

Both ideas, interpolation and the more specialized cancer-killer model, are consistent with those proposed by Graphcore CTO Simon Knowles, who has talked about "distilling" a very large, more general neural network into something specific.

Both ideas seem to make the Poplar software a key piece of functionality. If new pieces of data are sparse, fill in gaps, and must be fetched from many locations in an associative memory and operated on across many graphs, then Poplar, acting as a kind of traffic cop that distributes that data and the associated tasks in parallel across IPU chips, plays an important role.

Despite making this case, Toon is by no means dogmatic. He noted that no one has yet determined the answer. "I think there are different philosophies and different ideas about how it works, but no one quite knows. That's what people are exploring."

When will anyone answer all the deep questions? Probably not anytime soon.

"The amazing thing about AI is that we're ten years on from AlexNet and we still feel like we're exploring," he said, referring to the neural network that excelled in the 2012 ImageNet competition and brought the deep learning form of AI to the forefront.

"I always use the analogy of computer games," Toon said. He wrote a Star Wars game for his first computer, a 6502-based kit from a "long-forgotten" computer manufacturer. "In terms of the development of artificial intelligence, we may still be in the Pac-Man stage; we haven't gotten to 3D games yet," he said.

On the road to 3D games, "I don't think there will be an AI winter," Toon said, referring to the many times over the decades that funding has dried up and the industry has collapsed.

"The difference today is that it works, it's real," he said. In previous eras, with AI companies like Thinking Machines in the 1980s, "It just didn't work, we didn't have enough data, we didn't have enough compute."

"Now, it's clear that it works, there's clear evidence, it brings tremendous value," Toon said. "People are building entire businesses. I mean, ByteDance and TikTok, that's fundamentally an AI-driven company, and AI is permeating the entire technology landscape and the enterprise. It's amazing how fast."

The battle between tech giants, such as TikTok and Meta’s Instagram, can be seen as an artificial intelligence battle, an arms race to have the best algorithms.

With $730 million in venture funding, Graphcore certainly has enough money to survive any winter. Toon declined to provide information on Graphcore's revenue.

"We have money, we have a team," he said. "I think you can always have more money and a bigger team, but you have to work within the constraints that you have." Graphcore has 650 employees.

For now, an AI winter is not the challenge. The challenge is selling customers on the superior combination of Poplar software and the IPU, which sounds like an uphill battle against the giant Nvidia.

Meta, the owner of Facebook, recently announced a "research supercomputer" for AI and the metaverse, built on 6,080 Nvidia GPUs. It seems people just want more and more Nvidia.

"I think that's the challenge we face as a business, can we change people's hearts and minds," Toon said.

Company official website: https://www.graphcore.ai/

Related reports: https://www.zdnet.com/article/the-future-of-ai-is-a-software-story-says-graphcores-ceo/