Author: compass from the back-end technology: back-end technology compass

0 Overview

will learn through this article The following content:

- I/O multiplexing definition and background Realize the ET mode and LT mode of

- epoll

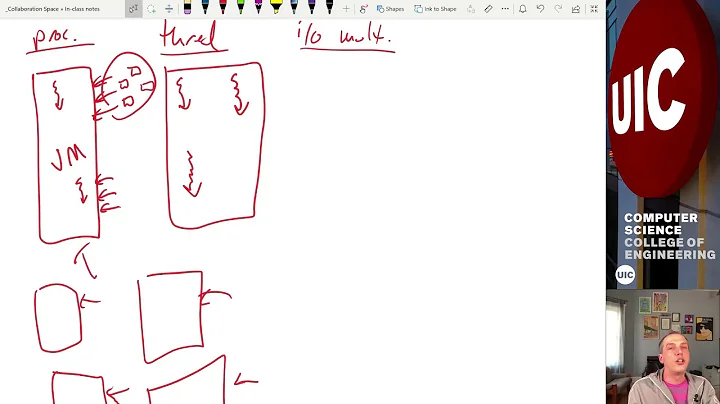

1. Multiplexing technology and I/O multiplexing

- Multiplexing Concept

Multiplexing is not a new technology but a design idea. There are frequency division multiplexing, time division multiplexing, wavelength division multiplexing, code division multiplexing in communication and hardware design. There are many scenes reused in daily life, so don’t be confused by professional terms.

In essence, reuse is to solve the imbalance of limited resources and too many users, and the theoretical basis of this technology is the releasability of resources.

- Releasability of resources

Take a real life example:

Unreleasable scene : Ventilator in ICU ward With limited resources, once occupied by the patient and before being out of danger, it is impossible to give up the occupation, so it is impossible for several patients with the same situation to take turns.

Releasable scene : For some other resources, such as medical staff, multiple patients can be monitored at the same time. In theory, there is no scenario where a patient occupies medical staff resources without releasing them.

- Understand IO reuse

The meaning of I/O : The IO often referred to in the computer field includes disk IO and network IO. The IO multiplexing mentioned mainly refers to network IO. In Linux, everything is a file, so network IO is often represented by file descriptor FD.

The meaning of multiplexing : So what should these file descriptors FD be reused? In the network scenario, the task processing thread is reused, so the simple understanding is that multiple IOs share one thread.

IO multiplexing feasibility : The basic operations of IO request include read and write. Due to the nature of network interaction, there must be waiting. In other words, the read and write of FD in the entire network connection alternately , Sometimes readable and writable, sometimes idle, so IO multiplexing is available.

In summary, IO multiplexing technology is to coordinate multiple resource-releasing FD alternately shared task processing threads to complete communication tasks, and achieve multiple fd corresponding to one task processing thread.

In real life, IO reuse is like managing hundreds of sheep by a border herd:

- IO reuse design principles and Background

The efficient IO multiplexing mechanism must meet: the coordinator consumes the least system resources, minimizes the waiting time of FD, maximizes the number of FDs, minimizes the idleness of task processing threads, how fast and better Complete tasks, etc.

In the original period when the network concurrency was very small, even if the network request was processed per req per process, it could meet the requirements. However, as the network concurrency increases, the original method mustWill hinder progress, so it stimulates the realization and promotion of IO reuse mechanism.

2. IO multiplexing tools in Linux

In Linux, select, poll, epoll, etc. have appeared successively. FreeBSD's kqueue is also a very good IO multiplexing tool, the principle of kqueue and epoll Very similar, this article takes the Linux environment as an example, and does not discuss too many implementation mechanisms and details of select and poll.

- Trailblazerselect

select appeared about in early 2000, and its external interface definition:

04a7

As the first IO multiplexing system call, select uses a macro definition function to fill fd according to the bitmap principle. The default size is 1024. Therefore, if the value of fd is greater than 1024, there may be problems. See the official warning:

That is to say, when the value of fd is greater than 1024, it will be uncontrollable. The official recommendation is not to exceed 1024, but we cannot control the absolute value of fd. We have done this before. After some research, the conclusion is that the system has its own strategy for the allocation of fd, which will most likely be allocated within 1024. I don't fully understand this, but just mention this pit.

Existing problems:

- The number and value of coordinated fd do not exceed 1024. High concurrency cannot be achieved.

- Use O(n) complexity to traverse the fd array to view the fd. Low read and write efficiency

- involves a large number of kernel and user mode copies and consumes a lot

- Each time the monitoring is completed, it needs to be re-introduced again and the operation is redundant by event

In summary, select The IO multiplexing is realized in a simple way, and the concurrency is increased to the maximum K level, but the cost and flexibility of completing this task need to be improved. In any case, select as a pioneer has a huge push for IO reuse, and indicates the direction of subsequent optimization, do not ignorantly accuse select.

- The successor epoll

epoll first appeared in the 2.5.44 kernel version, and subsequently optimized the code in the 2.6.x version to make it more concise, successively In the face of doubts from the outside world, some settings were added to solve the hidden problem in the follow-up, so epoll has a history of more than ten years.

has not introduced epoll in the third edition of "Unix Network Programming" (2003), because epoll has not yet appeared in that era. The book only introduces select and poll, and epoll solves the problems in select one by one. Simply put, the advantages of epoll include:

- There is no limit to the number of fd (of course this is also solved in poll)

- Abandoned the bitmap array and implemented a new structure to store multiple event types

- No need to copy fd repeatedly, add and discard as you use it, delete and delete

- Use event-driven to avoid polling to view readable and writable events

In summary, after the emergence of epoll, the concurrency has been greatly increased. For C10K problems Easily deal with it, even if real asynchronous IO appears in the future, it has not (temporarily not) shaken the status of epoll, mainly because epoll can solve tens of thousands of hundreds of thousands of concurrency, which can already solve most of the current scenarios. Asynchronous IO is excellent, but programming is more difficult than epoll. Under the trade-off, epoll is still very productive.Vitality.

3. The basic realization of epoll

- epoll's api definition :

04a2d#38e#epoll_create is to create a series of epoll-related structures in the kernel area, and return a handle fd to the user mode. The subsequent operations are based on this fd. The parameter size tells the kernel the size of the elements of this structure, similar to The vector dynamic array of stl, if the size is not suitable, it will involve replication and expansion, but it seems that the size is not very useful after the 4.1.2 kernel;

- Popular description:

The above description may be a bit abstract, but it is actually very easy to understand. Take a real example:

- epoll_create scene :

- In the first week of the university, you are the class leader You need to help the whole class to collect related items. You tell the staff in the student office that I am the monitor of the xx class of xx college. At this time, the staff confirms your identity and gives you the voucher. You need to do everything later (That is, call epoll_create to apply for the epfd structure from the kernel, and the kernel returns the epfd handle for you to use);

- epoll_ctl scene : 2 cfb7# You take the voucher and start working in the working hall, the sorting office staff said that the class leader, please record all the student booklets and the things that need to be handled, so the class leader starts to write in each student handbook separately Corresponding things need to be done:

- Li Ming needs to open the laboratory permission, Sun Daxiong needs to apply for a swimming card...In this way, the squad leader wrote it all and gave it to the staff (that is, tell the kernel which fd needs What operations to do);

- epoll_wait scene :

- You are waiting in front of the receiving office with your voucher. At this time, the broadcast is calling xx squad leader and your grandson big bear’s swimming card is ready soon. Receipt, Li Ming’s laboratory permission card is completed, come quickly... and the classmate's affairs are not completed, so the monitor can only continue (that is, call epoll_wait to wait for the readable and writable event feedback from the kernel to occur and process);

- OfficialDEMO

You can see the official demo through man epoll:

0da8a2#

0daa3a#

In epoll_wait, it is necessary to distinguish whether it is the new connection event of the main listener thread fd or the read and write request of the connected event, and then handle them separately.

4. epoll's bottom-level implementation

epoll bottom-level implementation of the two most important data structures: epitem and eventpoll.

can simply think that epitem corresponds to the fd of each user mode monitoring IO, eventpoll is the structure created by the user mode to manage all monitored fd, the detailed definition is as follows:

- Underlying call process

epoll_create will create an object of type struct eventpoll and return it Corresponding to the file descriptor, then the application will rely on this file descriptor when using epoll in the user mode, and the eventpoll type object is further obtained through the file descriptor inside epoll, and then the corresponding operation is performed to complete the user mode And the core state runs through.

epoll_ctl bottom layer mainly calls epoll_insert to implement operations:

- creates and initializes a strut epitem type object, completes the association between the object and the monitored event and the epoll object eventpoll;

- changes the struct epitem type The object is added to the red-black tree of the epoll object eventpoll for management;

- adds the struct epitem type object to the waiting list of the target file corresponding to the monitored event, and registers the callback function that will be called when the event is ready, In epoll, the callback function is ep_poll_callback();

- ovflist is mainly for transient processing. For example, when the ep_poll_callback() callback function is called, it is found that the ovflist member of eventpoll is not equal to EP_UNACTIVE_PTR, indicating that the rdllist list is being scanned, and it will be ready at this time The epitem corresponding to the event is added to the ovflist linked list for temporary storage, and the elements in the ovflist linked list are moved to the rdllist linked list after the rdllist linked list is scanned;

- shows the relationship between the red-black tree, double-linked list, and epitem :

Note: rbr stands for rb_root, rbn stands for rb_node. The definition in the kernel is given above

7e00#2cfb#2cfb#epoll_wait data copyCommon wrong view: When epoll_wait returns, for the ready event, epoll uses the shared memory method, that is, both the user mode and the kernel mode point to the ready linked list, so It avoids memory copy consumption. The opinions copied and copied online

about epoll_wait using shared memory to speed up the data interaction between user mode and kernel mode and avoid memory copy views, which has not been confirmed by the 2.6 kernel version code , And the realization of this copy is like this:

5.ET mode and LT mode

- Simple understanding

defaults to LT mode, LT supports blocking and non-blocking sockets, ET mode only supports non-blocking sockets, its efficiency is higher than LT mode, and LT mode is more secure.

In both LT and ET modes, you can use the epoll_wait method to obtain events. After the events are copied to the user program in LT mode, if they have not been processed or have not been processed, they will be fed back to the user program in the next call. It can be considered that the data will not be lost and will be repeatedly reminded; if it is not processed or not processed in

ET mode, the user program will not be notified next time, thus avoiding repeated reminders, but strengthening the user program Reading and writing requirements;

- In-depth understanding

The simple understanding above will be mentioned in any article on the Internet, but when LT and ET are really used, it is still There is a certain degree of difficulty.

- LT's read and write operations

LT is relatively simple for read operations. There is a read event to read. There is no problem with reading more and less, but write is not so easy, generally The sending buffer of the socket must be dissatisfied when it is in the idle state. If the fd is always monitoring, it will always notify the write event, which is annoying.

Therefore, it must be ensured that when there is no data to send, the write event monitoring of fd should be deleted from the epoll list, and then added back when needed, and so on.

There is no free lunch in the world. It is impossible to always remind at no cost. The excessive reminder corresponding to write requires the user to add it as needed, otherwise the user will always be reminded of writable events.

- ET's read and write operations

fd is readable and returns a readable event. If the developer has not read all the data, epoll will not notify the read event again. That is to say, if all the data is not read, epoll will no longer notify the socket read event. In fact, it is easy to read all the time.

If the sending buffer is not full, epoll notifies the write event. Until the developer fills the sending buffer, epoll will not notify the write event the next time the sending buffer changes from full to not full.

ET mode will only notify when the status of the socket changes, that is, when the read buffer changes from no data to data, the read event is notified, and the send buffer changes from full to not full to notify the write event.

- An interview question

Use the LT horizontal trigger mode of the Linux epoll model. When the socket is writable, it will continuously trigger the socket writable How to deal with events? Tencent interview question

circulated on the Internet has a more in-depth investigation of LT and ET, which verifies the LT mode write problem mentioned above.

Common practice :

When data needs to be written to the socket, add the socket to epoll and wait for a writable event. After receiving the socket writable event, call write() or send() to send data. When all the data is written, remove the socket descriptor from the epoll list. This practice requires repeated addition and deletion.

Improved practice :

When writing data to the socket, directly call send() to send. When send() returns the error code EAGAIN, the socket is added to epoll, and the socket is sent after waiting for a writable event Data, all data is sent, and then move out of the epoll model. The improved approach is equivalent to thinking that the socket is writable most of the time.oll helps monitor.

The above two methods are to fix the frequent notification of write events in LT mode. In essence, it can be done directly in ET mode and does not require patching of user-level programs.

- Thread starvation problem in ET mode

If a socket continuously receives a lot of data, in the process of trying to read all the data , It may cause other sockets not to be processed, thereby causing hunger problems.

Solution: Maintain a queue for each prepared descriptor, so that the program can know which descriptors have been prepared but have not been read, and then the program will read it regularly or quantitatively. After reading, it will be removed until the queue is empty. This ensures that each fd is read and no data will be lost. The process is shown in the figure:

- EPOLLONESHOT set

After the A thread reads the data on a socket, it starts to process the data. At this time, there is new data on the socket to read, and the B thread is awakened to read the new data, causing 2 When threads operate on a socket at the same time, EPOLLONESHOT guarantees that a socket connection is processed by only one thread at any time.

- Choice of the two modes

Through the previous comparison, we can see that the LT mode is safer and the code writing is clearer, but the ET mode is a high-speed mode, and it is dealing with high-speed Concurrency scenarios are more effective when used properly. The specific choice depends on your actual needs and team code capabilities. If the concurrency is high and the team level is high, you can choose the ET mode, otherwise the LT mode is recommended.

6. The shocking group problem of epoll

The shocking group problem of accept in the 2.6.18 kernel has been solved, but the shocking group problem still exists in epoll. It means that when multiple processes/threads call epoll_wait, they will block and wait. When the kernel triggers a read-write event, all processes/threads will respond, but in fact only one process/thread actually handles these events.

Before epoll officially fixed this problem, Nginx, as a well-known user, used a global lock to limit the number of processes that can monitor fd at a time. There is only one process that can be monitored at a time, which was later added in the Linux 3.9 kernel. The SO_REUSEPORT option implements kernel-level load balancing. Nginx 1.9.1 version supports the new feature of reuseport, thus solving the problem of shocking groups.

EPOLLEXCLUSIVE is a newly added epoll logo in the Linux 4.5 kernel in 2016. Ngnix added the NGX_EXCLUSIVE_EVENT option after 1.11.3 to support this feature. The EPOLLEXCLUSIVE flag will ensure that only one thread will be awakened when an event occurs, so as to avoid the cluster problem caused by multiple listening.